If our goal is to make the world a better place to live with the help of AI, AI has to scale, and for technologies to scale, they need to become cheaper, more sustainable, and more viable to adopt.

Enterprises today stand on the brink of a transformative opportunity. In 2017, only 20% of organizations had adopted AI. In just a few years, that figure has more than doubled: over 50% of enterprises across the globe now use AI in their operations. And the race to harness this powerful technology shows no signs of slowing.

The gold rush among enterprises to adopt AI stems from recent advancements in synthetic data, cloud technologies, and foundational AI models. Today, integrating AI is within reach for enterprises across many industries.

The future of AI leadership lies in understanding the tech stack available to us today. Combine that understanding with widespread enterprise-level adoption of those capabilities, and the impact we can achieve with AI is unprecedented.

This blog explores the steps enterprises can take to adopt new and emerging AI capabilities seamlessly.

As digital product stakeholders, we all know the importance of good product onboarding. For example, how quickly users can take advantage of a new feature in their favorite app can define business success. Apply the same logic to AI for a minute, and a similar pattern starts to emerge.

For AI to achieve widespread adoption, its applications must extend beyond small-scale uses and deliver solutions that are not only innovative but also easy to implement, especially within the enterprise ecosystem.

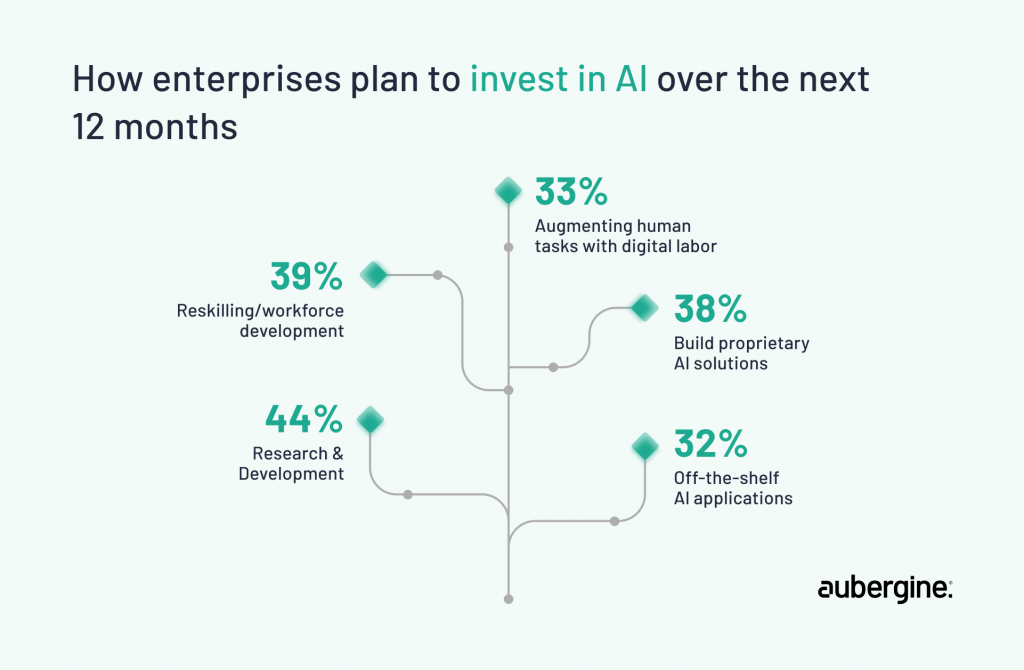

According to the IBM Global AI Adoption Index 2023, enterprise AI adoption is being driven by widespread deployment among early adopters, but barriers remain: about 40% of enterprises globally are still in the exploration and experimentation phases.

However, organizations in India (59%), the UAE (58%), Singapore (53%), and China (50%) are leading the way in the active use of AI, compared with lagging markets like Spain, Australia, and France.

At a time when AI is unlocking new frontiers of scientific discovery by analyzing complex data at scale, it’s time for technology pioneers to start thinking creatively about how to leverage its power.

It’s also time for the creators of AI, across all its layers and modalities, to recognize that the winners will be the solutions that integrate most seamlessly with the real world.

Optimizing AI for enterprises creates shared value from which many stakeholders, including users, governments, and businesses, will benefit.

Enterprises are investing in new hardware, such as specialized AI chips and edge devices, to power their AI applications. However, enterprises can no longer take a rigid, long-term approach to infrastructure; they need to future-proof their technology foundations to keep up with the rapid evolution of AI and unlock its full potential.

“We’re seeing that the early adopters who overcame barriers to deploy AI are making further investments, proving to me that they are already experiencing the benefits from AI. More accessible AI tools, the drive for automation of key processes, and increasing amounts of AI embedded into off-the-shelf business applications are top factors driving the expansion of AI at the enterprise level.”

Rob Thomas, Senior Vice President, IBM Software.

A modular enterprise architecture allows for the standardization of interfaces among business processes and services, enabling plug-and-play components that can be used to meet changing business demands.
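To make the idea of standardized interfaces and plug-and-play components more concrete, here is a minimal Python sketch. The `RiskScorer` interface, the two scorers, and the `LoanService` process are hypothetical names invented for illustration, not any vendor’s API. The point is that the business process depends only on the interface, so one component can be swapped for another without touching the rest.

```python
from dataclasses import dataclass
from typing import Protocol


class RiskScorer(Protocol):
    """Hypothetical standardized interface: anything with this score() method can plug in."""

    def score(self, features: dict[str, float]) -> float: ...


@dataclass
class RuleBasedScorer:
    threshold: float = 0.5

    def score(self, features: dict[str, float]) -> float:
        # Hand-written rule: flag applicants whose debt-to-income ratio exceeds the threshold.
        return 1.0 if features.get("debt_to_income", 0.0) > self.threshold else 0.0


@dataclass
class WeightedScorer:
    weights: dict[str, float]

    def score(self, features: dict[str, float]) -> float:
        # Weighted sum of the features, clipped to the range [0, 1].
        raw = sum(self.weights.get(name, 0.0) * value for name, value in features.items())
        return max(0.0, min(1.0, raw))


class LoanService:
    """Business process that depends only on the RiskScorer interface, not on a concrete model."""

    def __init__(self, scorer: RiskScorer) -> None:
        self.scorer = scorer

    def decide(self, features: dict[str, float]) -> str:
        return "review" if self.scorer.score(features) >= 0.5 else "approve"


applicant = {"debt_to_income": 0.6, "late_payments": 0.1}
print(LoanService(RuleBasedScorer()).decide(applicant))  # review
print(LoanService(WeightedScorer({"debt_to_income": 0.5, "late_payments": 1.0})).decide(applicant))  # approve
```

Because `LoanService` never imports a specific scorer, replacing the rule-based component with a learned one is a one-line change at the point where the service is assembled.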

Enterprises are beginning to prefer modular architectures because they allow for greater flexibility, scalability, and ethical governance in technology solutions.

Historically, customizing the various components of the machine learning lifecycle has been a cumbersome, manual task, often requiring a ‘do-it-yourself’ approach.

A chapter from O’Reilly explores the intricacies of building machine learning pipelines and highlights the need for orchestration tools to manage this complexity.

While this level of customization is feasible for data science experts, it presents a challenge for most businesses, which prefer some separation from the intricate details of model development.

These organizations tend to lean towards solutions that offer control over the modeling process without necessitating a deep dive into the technicalities of each step.

This trend signals a clear shift: enterprise AI buyers are moving beyond the desire for seamlessly integrated, one-size-fits-all AI systems.

Instead, there’s a growing demand for the ability to fine-tune and customize every aspect of AI models and their development processes to more precisely meet specific needs.

Goldman Sachs has tackled the challenge of making machine learning customization simpler and more manageable.

In the finance sector, where precise financial models and regulatory compliance are critical, the firm has shifted towards a modular approach for its AI and ML development.

This means breaking down complex systems into smaller parts that can be updated or tested independently. This speeds up the process and makes it easier to keep up with market changes or new regulations.
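A minimal sketch of that idea, assuming a pipeline of small, named, independent steps. This is not Goldman Sachs’s system: `Pipeline`, `drop_invalid`, `to_thousands`, and `cap_at` are hypothetical names, and the amount cap merely stands in for a changing regulatory rule. Each step is a plain function that can be unit-tested on its own, and `replace` swaps one step while leaving the others untouched.

```python
from typing import Callable

Record = dict[str, float]
Step = Callable[[list[Record]], list[Record]]


def drop_invalid(rows: list[Record]) -> list[Record]:
    # Data-quality step: discard records with a negative amount.
    return [r for r in rows if r["amount"] >= 0]


def to_thousands(rows: list[Record]) -> list[Record]:
    # Transformation step: express amounts in thousands.
    return [{**r, "amount": r["amount"] / 1000} for r in rows]


def cap_at(limit: float) -> Step:
    # Factory for a policy step; the limit stands in for a (hypothetical) regulatory cap.
    def cap(rows: list[Record]) -> list[Record]:
        return [{**r, "amount": min(r["amount"], limit)} for r in rows]

    return cap


class Pipeline:
    """Runs named steps in insertion order; each step can be replaced on its own."""

    def __init__(self, steps: dict[str, Step]) -> None:
        self.steps = dict(steps)

    def replace(self, name: str, step: Step) -> "Pipeline":
        # Return a new pipeline with one step swapped and every other step unchanged.
        if name not in self.steps:
            raise KeyError(f"unknown step: {name}")
        return Pipeline({**self.steps, name: step})

    def run(self, rows: list[Record]) -> list[Record]:
        for step in self.steps.values():
            rows = step(rows)
        return rows


rows = [{"amount": 120_000.0}, {"amount": -5.0}, {"amount": 30_000.0}]
base = Pipeline({"clean": drop_invalid, "scale": to_thousands, "limit": cap_at(100.0)})
print(base.run(rows))  # [{'amount': 100.0}, {'amount': 30.0}]

# A rule change touches only the "limit" step; "clean" and "scale" are reused as-is.
updated = base.replace("limit", cap_at(50.0))
print(updated.run(rows))  # [{'amount': 50.0}, {'amount': 30.0}]
```

Returning a new `Pipeline` from `replace` rather than mutating the old one keeps the previous configuration available, which makes it easy to compare outputs before and after an update.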

This approach is practical for a fast-moving field like finance, allowing for quicker adjustments and less downtime when making necessary updates.

That said, it would be premature to declare a definitive move away from interdependent architectures in favor of modular solutions. The reality is more nuanced, with modular and integrated AI platforms finding their own place within the ecosystem.

The industry is steering towards a balanced approach, blending the strengths of both architectures.

The AI evolution is following a similar path to the early days of the internet, showing how history often repeats itself with new technologies. When the internet first started, most of the money and attention went into building its foundation.

Think of companies like Cisco, which made the equipment to connect networks. As the internet’s infrastructure matured, the focal point of innovation and investment shifted towards the application layer, where software and services directly interact with users.

This is when tech giants rose to fame: Google, which shaped how we find information; Amazon, which transformed retail; and Facebook, which redefined social interaction.

In the early days of AI, much like the initial phase of the internet, the emphasis was on building the necessary infrastructure to support AI’s computational demands. This involved significant investments in high-performance computing systems, data storage solutions, and the networking capabilities required to efficiently process and analyze vast amounts of data.

Companies like Nvidia became pivotal during this phase, developing GPUs that significantly accelerated AI computations. These hardware components were crucial for training increasingly complex AI models, laying the groundwork for today’s AI advancements.

A standout example of a company that successfully navigated this shift is Palantir. Founded with a focus on data integration and analytics, Palantir invested heavily in developing robust data processing and analysis capabilities, which can be considered part of the foundational layer of AI technology.

However, as its infrastructure matured, Palantir pivoted towards creating sophisticated software applications that leverage this infrastructure.

Its platforms, Palantir Gotham and Palantir Foundry, are now used across various sectors for data-driven decision-making, from defense and intelligence to healthcare and finance.

It’s crucial to recognize that the journey from infrastructure to application layer investment isn’t a simple transition but a nuanced expansion of focus.

The reality is that advancements in AI applications often drive new requirements for infrastructure, just as innovations in infrastructure open up possibilities for application development.

While the GenAI tech stack is diverse, its heart is the AI model layer. This is where the algorithms that analyze data, learn from it, and make predictions or decisions based on it reside.

Foundational models are general-purpose models trained on massive amounts of heterogeneous data from various sources and modalities. The GPT models behind ChatGPT are one example.

Hyperlocal AI models are designed to serve specific communities, regions, or cultural groups, embedding a deep understanding of local nuances, languages, and behaviors.

This approach is especially critical in complex industries like healthcare, where the stakes are high and the demand for precision and cultural sensitivity is paramount.

Hyperlocal models in healthcare can lead to more accurate diagnoses, personalized treatment plans, and better patient outcomes by considering genetic, environmental, and cultural factors influencing health.

Zipline’s use of hyperlocal AI models in healthcare logistics offers a great success story. Zipline, a drone delivery service, specializes in delivering medical supplies, including blood, vaccines, and medication, to remote areas in countries like Rwanda and Ghana.

By leveraging AI models incorporating hyperlocal data, such as geographic information, weather patterns, and local healthcare needs, Zipline ensures timely and efficient delivery of critical healthcare supplies.

This AI-driven approach optimizes delivery routes and schedules based on local conditions and predicts demand for medical supplies in different regions. This ensures that stocks are replenished before a crisis occurs.
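The “replenish before a crisis” idea can be illustrated with the textbook reorder-point rule. This is a generic inventory technique, not Zipline’s actual model, and the demand history and function names below are hypothetical. The rule reorders when stock falls to the expected demand over the resupply lead time plus a safety buffer scaled by how volatile demand has been.

```python
import math
from statistics import mean, stdev


def reorder_point(daily_demand: list[float], lead_time_days: float, z: float = 1.65) -> float:
    """Textbook reorder point: expected demand over the lead time plus safety stock.

    z = 1.65 corresponds to roughly a 95% service level if daily demand is
    approximately normal and independent from day to day (an assumption).
    """
    avg = mean(daily_demand)
    spread = stdev(daily_demand)  # sample standard deviation; needs >= 2 observations
    safety_stock = z * spread * math.sqrt(lead_time_days)
    return avg * lead_time_days + safety_stock


def needs_restock(on_hand: float, daily_demand: list[float], lead_time_days: float) -> bool:
    # Trigger a resupply once stock falls to or below the reorder point.
    return on_hand <= reorder_point(daily_demand, lead_time_days)


# Hypothetical week of demand (units/day) at one remote clinic, 2-day resupply lead time.
history = [4.0, 6.0, 5.0, 7.0, 3.0, 5.0, 5.0]
print(round(reorder_point(history, 2.0), 2))  # 13.01
print(needs_restock(12.0, history, 2.0))  # True
print(needs_restock(20.0, history, 2.0))  # False
```

A production system would replace the simple average with a forecast that uses the hyperlocal signals mentioned above (weather, geography, local health trends), but the decision rule on top of the forecast can stay this simple.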

The transition towards hyperlocal AI models, particularly in a nuanced field like healthcare, underscores the maturation of AI technologies and their increasing alignment with real-world needs.

While general AI models lay the groundwork by providing a broad base of knowledge and capabilities, the evolution towards hyperlocal models represents a refinement of this technology. It aims to deliver more targeted, effective, and culturally and environmentally aware solutions that account for real-life nuances.

However, developing these models requires a deep understanding of local contexts and access to diverse and comprehensive datasets.

The initial allure of LLMs like GPT-4 lay in their ability to find patterns within massive datasets without explicit labeling. This served as a groundbreaking starting point for understanding and generating human-like text.

However, the industry increasingly recognizes the need for more sophisticated data vetting, refinement, and fine-tuning approaches to achieve optimal outcomes.

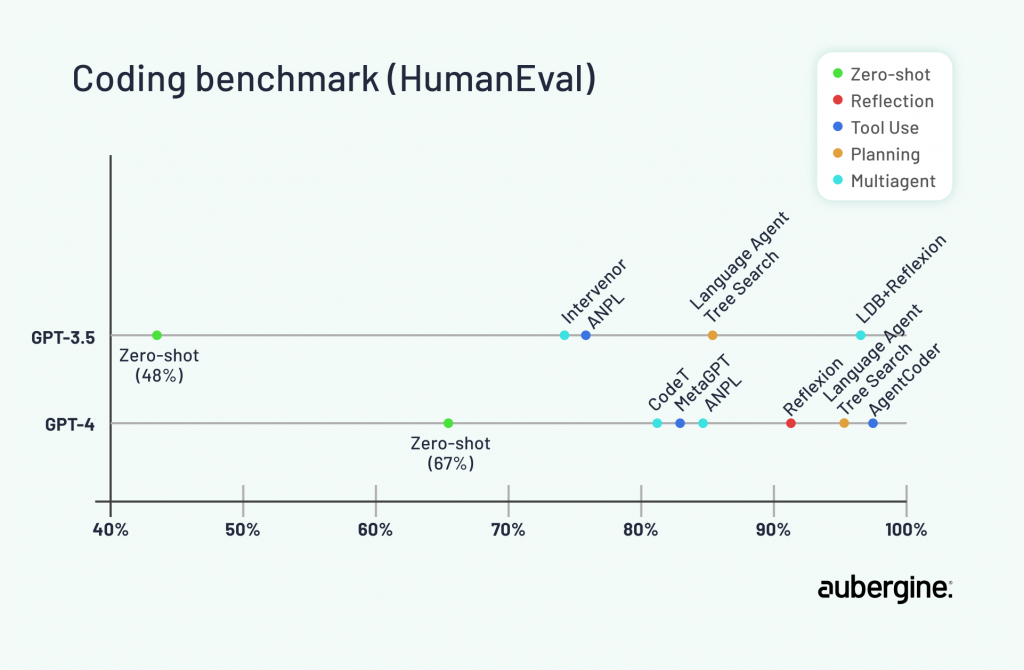

Emerging innovations now point towards the development of smaller, self-teaching models. These models promise to rival, and potentially surpass, the capabilities of their larger counterparts by leveraging the principles of agentic workflows and iterative learning, as highlighted in Dr. Andrew Ng’s discussion.

Dr. Andrew Ng, a computer scientist, co-founder and former head of Google Brain, former chief scientist of Baidu, and a leading mind in artificial intelligence, recently led a deep dive into this idea.

He spoke at Sequoia Capital’s AI Ascent about what’s next for agentic AI workflows and their potential to significantly propel AI advancements, perhaps even surpassing the impact of the next generation of foundational models.

Source: Dr. Ng

The concept of AI agents working within these workflows suggests a paradigm shift where AI can engage in self-improvement through a series of iterative tasks, reflective thinking, and collaboration with other agents.
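The reflection pattern can be sketched as a loop in which one agent drafts, another critiques, and the draft is revised until the critic is satisfied or a round limit is reached. In a real agentic system, `draft`, `critique`, and `revise` would be model calls; here they are hypothetical deterministic stand-ins so the control flow can run on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Trace:
    attempts: list[str] = field(default_factory=list)
    feedback: list[str] = field(default_factory=list)


def reflect_and_revise(
    task: str,
    draft: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> tuple[str, Trace]:
    """Draft once, then alternate critique and revision until the critic returns None."""
    trace = Trace()
    answer = draft(task)
    trace.attempts.append(answer)
    for _ in range(max_rounds):
        note = critique(task, answer)
        if note is None:  # the critic has nothing left to fix
            break
        trace.feedback.append(note)
        answer = revise(answer, note)
        trace.attempts.append(answer)
    return answer, trace


# Hypothetical stand-ins for model calls, kept deterministic so the loop is easy to follow.
def toy_draft(task: str) -> str:
    return "AI adoption is growing quickly across many organizations worldwide today"


def toy_critique(task: str, answer: str) -> Optional[str]:
    words = answer.split()
    if len(words) > 8:
        return f"Too long: {len(words)} words, limit is 8."
    if "enterprise" not in answer.lower():
        return "Mention enterprises explicitly."
    return None


def toy_revise(answer: str, note: str) -> str:
    if note.startswith("Too long"):
        return " ".join(answer.split()[:8])
    return answer.replace("organizations", "enterprises")


final, trace = reflect_and_revise("Summarize AI adoption", toy_draft, toy_critique, toy_revise)
print(final)  # AI adoption is growing quickly across many enterprises
print(trace.feedback)  # ['Too long: 10 words, limit is 8.', 'Mention enterprises explicitly.']
```

Capping the number of rounds keeps cost bounded when the critic is never satisfied, and keeping the full trace makes each agent’s contribution inspectable.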

Designed to emulate the complex reasoning abilities of much larger models at a fraction of their size, Microsoft’s Orca showcases the potential of progressive learning techniques.

By learning progressively from carefully curated datasets and using teacher assistance to guide its learning process, Orca can reach reasoning performance comparable to, or on some benchmarks better than, considerably larger models.

This not only makes AI more accessible by reducing the need for vast data but also opens up new possibilities for AI applications in environments where data availability is limited or where privacy concerns limit the use of large datasets.

We are making AI more independent and capable of learning from its experiences, much like a group of experts solving a problem. This development leads to AI that not only learns and adapts more effectively but also delivers more sophisticated and tailored results.

While the journey of integrating these self-teaching models into practical applications is still in its early stages, their potential for transforming AI usability, accessibility, and performance is immense.

As the industry continues to explore and refine these models, the focus will likely shift towards optimizing their learning processes, ensuring ethical use of data, and expanding their applicability across diverse sectors.

To harness the full spectrum of AI’s transformative power, it must move beyond limited use cases to become a staple in broad, enterprise-wide strategies. This requires the creation and deployment of solutions that not only break new ground in innovation but are also straightforward to implement.

Enterprise business leaders, therefore, are responsible for thinking creatively about how to use AI’s vast capabilities effectively.

At the same time, AI developers, whether part of nimble startups or established giants, are tasked with crafting technologies that blend seamlessly into the fabric of everyday operations.

This dual approach ensures that AI’s revolutionary benefits are fully accessible and applicable across the business landscape, ushering in a new era of intelligent digital solutions.

In these evolving times, Aubergine Solutions is an ally for enterprise businesses aiming to implement AI seamlessly and effectively. Our robust experience in UX development and software design, coupled with our team of in-house AI experts, enables us to craft tailored AI integrations that not only align with operational workflows but also propel your enterprise towards substantial growth and innovation.

Our commitment is to bridge the gap between cutting-edge AI technology and practical, scalable applications for businesses.